Artificial intelligence (AI) has matured rapidly over the past few years thanks to aggressive development by industry players, and now supports a growing range of applications including autonomous driving, robots and voice assistant platforms. NVIDIA has been a pioneer in AI technology development, and its expertise in GPU parallel computing has made the company one of the top suppliers of deep learning hardware.

GPUs originally served only as an engine for simulating human imagination, conjuring up virtual worlds in video games and Hollywood films. After nearly 20 years of development since 1999, NVIDIA's GPUs are now able to simulate human intelligence, running deep learning algorithms and acting as the brain of computers, robots, and self-driving cars that can perceive and understand the world.

Asked how AI should be defined and what it is capable of, Ian Buck, NVIDIA general manager and vice president of the Accelerated Computing business unit, said it is better to think of it as a new way of doing computing. AI has become widely known over the past decade because it can replace traditional algorithms: instead of needing humans to give it specific instructions, a neural network is able to work out the algorithm itself from data.

Given a few million images of an object, an image recognition network can learn the most efficient way to detect that object. This is a new way of computing: instead of writing the code manually, people can let AI figure out the code for them, Buck noted.
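The contrast Buck draws can be illustrated with a toy example. The sketch below is not NVIDIA's method and is far from a deep network: it is a single perceptron, chosen here only for brevity, that learns the logical OR rule from four labeled examples rather than having the rule written by hand. The names `train_perceptron` and `predict`, the learning rate and the epoch count are all assumptions made for illustration.

```python
# Toy illustration only: a single perceptron stands in for a neural network.
# Nothing here comes from the article; names and settings are hypothetical.

def predict(weights, bias, x):
    """Return 1 if the weighted sum of the inputs plus bias is positive, else 0."""
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else 0


def train_perceptron(samples, epochs=10, lr=1):
    """Learn weights from (inputs, label) pairs with the perceptron update rule."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            # Nudge the parameters only when the prediction was wrong.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Labeled data for logical OR: the "rule" is never written down explicitly.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train_perceptron(OR_SAMPLES)
learned = [predict(weights, bias, x) for x, _ in OR_SAMPLES]
print(learned)
```

The program never contains an `or` expression; the behavior comes entirely from the training data, which is the point Buck makes at a much larger scale.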

At Computex 2017, NVIDIA announced partnerships with Taiwan-based server players including Foxconn Electronics (Hon Hai Precision Industry), Inventec, Quanta Computer and Wistron to develop GPU-oriented datacenter products, aiming to fulfill market demand for AI cloud computing hardware.

NVIDIA will provide these server players with early access to its HGX reference architecture, which is based on Microsoft's Project Olympus initiative, Facebook's Big Basin systems and NVIDIA's DGX-1 AI supercomputers, as well as to its GPU computing technologies and design guidelines, to help them develop GPU-accelerated systems for hyperscale data centers.

Buck pointed out that under the cooperation, server players can choose how to lay out their datacenter server systems and which components to adopt, allowing them to differentiate themselves from each other and provide added value for their customers. NVIDIA's engineers will stay in close communication with these players to ensure they receive the support needed for hardware development, so that they can develop datacenter server systems in as little time as possible and quickly release their products to market.

NVIDIA is fully aware that clients want special customization of their server products, such as form factor and cooling method, and is therefore working with the ODMs to create products using NVIDIA's HGX-1 architecture.

Marc Hamilton, NVIDIA's vice president of the Solutions Architecture and Engineering team, also gave details on NVIDIA's latest technology. He noted that the HGX reference design is built to meet the high-performance, efficiency and scaling requirements of cloud datacenter servers, and that it will serve as the basic architecture on which ODMs design their cloud computing datacenter servers.

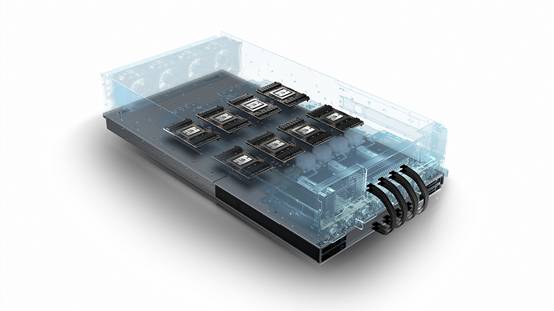

The standard HGX design architecture includes eight NVIDIA Tesla GPU accelerators in the SXM2 form factor and connected in a cube mesh using NVIDIA NVLink high-speed interconnects and optimized PCIe topologies. With a modular design, HGX enclosures are suited for deployment in existing data center racks across the globe, using hyperscale CPU nodes as needed, Hamilton added.
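As a rough sketch of what such an eight-GPU mesh can look like, the snippet below models one layout, assuming the hybrid cube mesh publicly described for eight-GPU systems with four NVLink links per GPU: two fully connected groups of four, with each GPU also linked to its counterpart in the other group. The article itself only says "cube mesh", so the exact wiring, the GPU numbering and the helper names `build_hybrid_cube_mesh` and `max_hops` are assumptions.

```python
from collections import deque

NUM_GPUS = 8   # from the article: eight Tesla accelerators
QUAD = 4       # assumption: GPUs are grouped into two quads of four


def build_hybrid_cube_mesh():
    """Return {gpu: set of directly linked gpus} for the assumed layout."""
    links = {gpu: set() for gpu in range(NUM_GPUS)}
    for gpu in range(NUM_GPUS):
        quad_start = (gpu // QUAD) * QUAD
        # Full connectivity inside the GPU's own quad (three links).
        for peer in range(quad_start, quad_start + QUAD):
            if peer != gpu:
                links[gpu].add(peer)
        # One link to the matching GPU in the other quad.
        links[gpu].add((gpu + QUAD) % NUM_GPUS)
    return links


def max_hops(links):
    """Breadth-first search from every GPU; return the worst-case hop count."""
    worst = 0
    for start in links:
        dist = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for peer in links[node]:
                if peer not in dist:
                    dist[peer] = dist[node] + 1
                    queue.append(peer)
        worst = max(worst, max(dist.values()))
    return worst


links = build_hybrid_cube_mesh()
links_per_gpu = {len(peers) for peers in links.values()}
total_links = sum(len(peers) for peers in links.values()) // 2
print(links_per_gpu, total_links, max_hops(links))
```

Under these assumptions every GPU uses four links and any GPU can reach any other in at most two hops, which is why this kind of mesh is attractive for multi-GPU training traffic.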

This is not the first time NVIDIA has collaborated with server players, however. In early March, NVIDIA announced a collaboration with Microsoft on a new hyperscale GPU accelerator to drive AI cloud computing: the HGX-1, an open-source design released in conjunction with Microsoft's Project Olympus.

The new architecture is designed to meet the demand for AI computing in the cloud – in fields such as autonomous driving, personalized healthcare, human voice recognition, data and video analytics, and molecular simulations. It is powered by eight NVIDIA Tesla P100 GPUs in each chassis, as well as NVIDIA NVLink interconnect technology and the PCIe standard – enabling a CPU to dynamically connect to any number of GPUs. This allows cloud service providers that standardize on the HGX-1 infrastructure to offer customers a range of CPU and GPU machine instance configurations.

NVIDIA believes that cloud workloads are growing more diverse and complex than ever, and that the highly modular design of the HGX-1 allows for optimal performance no matter the workload. It also provides up to 100 times faster deep learning performance compared with legacy CPU-based servers, and is estimated to deliver AI training at one-fifth the cost and AI inferencing at one-tenth the cost.

With its flexibility to work with data centers across the globe, HGX-1 offers existing hyperscale data centers a quick, simple path to be ready for AI.

Buck pointed out that NVIDIA currently has two major areas where it can contribute in the AI market. The first is the datacenter. Many end devices need to communicate back to datacenters to get answers, and NVIDIA has been focusing on developing AI technologies and training neural networks to learn new things. NVIDIA also works with its partners to develop software and hardware, and supplies kits including CUDA, cuDNN and TensorRT, which leading frameworks and applications can easily tap into to accelerate AI and research.

The second area is GPUs used in end devices. One example is autonomous driving systems. Since a car cannot rely only on a datacenter to drive, putting a supercomputer in the car is necessary for the application. NVIDIA has several solutions, including the Jetson embedded platform and the GPUs used in datacenters, that can be adopted in such a supercomputer.

Microsoft, NVIDIA and Ingrasys, a Foxconn subsidiary, collaborated to architect and design the HGX-1 platform, which they are sharing widely as part of Microsoft's Project Olympus contribution to the Open Compute Project, a consortium whose mission is to apply the benefits of open source to hardware, and rapidly increase the pace of innovation in, near and around the data center and beyond.

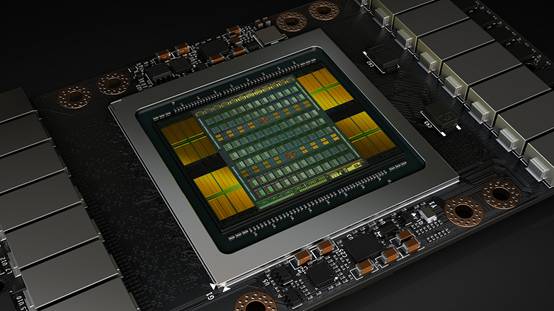

To further improve the HGX platform's performance, NVIDIA launched Volta in early May at its GTC show. The new GPU computing architecture was created to drive the next wave of advancement in artificial intelligence and high-performance computing. The first Volta-based processor, the NVIDIA Tesla V100 data center GPU, brings extraordinary speed and scalability to AI inferencing and training, as well as to accelerating HPC and graphics workloads.

Hamilton noted that Volta, NVIDIA's seventh-generation GPU architecture, is built with 21 billion transistors and delivers performance equivalent to that of 100 CPUs in deep learning applications.

Volta provides a 5x improvement over Pascal, the previous-generation NVIDIA GPU architecture, in peak teraflops, and 15x over the Maxwell architecture, launched two years ago. This performance surpasses by 4x the improvements predicted by Moore's law.

Datacenters need to deliver exponentially greater processing power as neural networks become more complex. They also need to scale efficiently to support the rapid adoption of highly accurate AI-based services, such as natural language virtual assistants and personalized search and recommendation systems.

Volta will become the new standard for high performance computing. It offers a platform for HPC systems to excel at both computational science and data science for discovering insights. By pairing CUDA cores and the new Volta Tensor Core within a unified architecture, a single server with Tesla V100 GPUs can replace hundreds of commodity CPUs for traditional HPC.

Volta's new technologies include Tensor Cores designed to speed up AI workloads. The Tesla V100 GPU is equipped with 640 Tensor Cores to deliver 120 teraflops of deep learning performance, equivalent to the performance of 100 CPUs.
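The 120-teraflop figure can be sanity-checked with back-of-the-envelope arithmetic. The sketch below takes the 640 Tensor Cores from the article and assumes two things the article does not state: that each Tensor Core completes 64 fused multiply-add operations per clock (one 4x4x4 matrix operation), and that the GPU runs at a boost clock of about 1,455MHz. The function name `tensor_teraflops` is hypothetical.

```python
TENSOR_CORES = 640             # from the article
FMA_PER_CORE_PER_CLOCK = 64    # assumption: one 4x4x4 matrix FMA per clock
FLOPS_PER_FMA = 2              # a fused multiply-add counts as two operations
BOOST_CLOCK_HZ = 1.455e9       # assumption: clock speed is not given in the article


def tensor_teraflops(cores, fma_per_clock, clock_hz):
    """Peak throughput in teraflops under the stated assumptions."""
    return cores * fma_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


peak = tensor_teraflops(TENSOR_CORES, FMA_PER_CORE_PER_CLOCK, BOOST_CLOCK_HZ)
print(f"{peak:.1f} teraflops")
```

With these inputs the result lands close to the quoted 120 teraflops. This is a theoretical peak, so real workloads should be expected to achieve less.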

An improved NVLink provides the next generation of high-speed interconnect linking GPUs, and GPUs to CPUs, with up to two times the throughput of the prior generation NVLink.

Developed in collaboration with Samsung, the GPU supports 900GB/s HBM2 DRAM, allowing the architecture to achieve 50% more memory bandwidth than previous generation GPUs, essential to support the computing throughput of Volta.

Both NVIDIA Tesla P100 and V100 GPU accelerators are compatible with HGX. This allows for immediate upgrades of all HGX-based products from P100 to V100 GPUs.

With the new NVIDIA Volta architecture-based GPUs offering three times the performance of their predecessors, ODMs can meet market demand with new products based on the latest NVIDIA technology available.

HGX is an ideal reference architecture for cloud providers seeking to host the new NVIDIA GPU Cloud platform. The NVIDIA GPU Cloud platform manages a catalog of fully integrated and optimized deep learning framework containers, including Caffe2, Cognitive Toolkit, MXNet and TensorFlow.

NVIDIA is seeing strong growth in AI applications across different channels, Buck noted, adding that people are highly interested in hyperscale cloud, traditional OEM and AI-supported personal workstations. Demand from AI developers and researchers for high-end consumer-based PCs and notebooks has also been picking up.

Currently, all major public cloud computing service providers have adopted GPU-powered datacenter servers for their computing needs, and the driving force behind the trend is AI, Buck said.

This fact also corresponds to the latest market trend. In 2016, Taiwan's server revenues increased 4.8% on year, reaching NT$555.8 billion (US$18.6 billion) because of increased server demand worldwide. Revenues are estimated to grow to NT$588.6 billion in 2017 as demand from datacenter clients including Amazon, Google, Facebook and Microsoft is expected to rise further.
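The revenue figures above imply a few numbers the text does not spell out. The short calculation below derives them from the article's figures only; the variable names are illustrative, and the 2015 revenue and the exchange rate are back-calculated values, not reported ones.

```python
# Figures quoted in the article (billions).
REVENUE_2016_NTD_BN = 555.8
REVENUE_2016_USD_BN = 18.6
GROWTH_2016 = 0.048              # 4.8% on-year growth in 2016
REVENUE_2017_EST_NTD_BN = 588.6

# Derived values (not stated in the article).
implied_growth_2017 = REVENUE_2017_EST_NTD_BN / REVENUE_2016_NTD_BN - 1
implied_revenue_2015 = REVENUE_2016_NTD_BN / (1 + GROWTH_2016)
implied_fx = REVENUE_2016_NTD_BN / REVENUE_2016_USD_BN

print(f"Implied 2017 growth: {implied_growth_2017:.1%}")
print(f"Implied 2015 revenue: NT${implied_revenue_2015:.1f} billion")
print(f"Implied exchange rate: NT${implied_fx:.2f} per US$1")
```

The 2017 estimate works out to growth of about 5.9%, slightly faster than the 4.8% recorded in 2016.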

With demand for products featuring AI, such as robots, smart voice assistants, and autonomous driving solutions, continuing to see growth, GPU-oriented datacenter server shipments are expected to gain popularity in the industry.

Although many of the related applications are not yet fully mature, business opportunities have grown quickly over the past few years. With NVIDIA's close involvement and assistance, especially on the software side, AI is expected to become a new growth driver for the IT industry and a new business direction that Taiwan-based hardware players can venture into to seek new opportunities.

Image 1: NVIDIA HGX reference architecture

Image 2: NVIDIA Tesla V100